Aug 21, 2024

Andrei Lebed

I have sat on many panels this year discussing regulation in the AI space, and every time I leave with one overwhelming feeling: the UK is simply not doing enough.

In my view, AI is a great technology, like many technologies we have incorporated into our daily lives in the past, and it is no scarier than they were: an exciting new technology that can help us do great things. Whilst its ability to generate and understand context far outperforms other forms of technology, we need to move past the hype and the fear so that we can rationalise and regulate it.

At Koodoo we are embracing AI because we believe it will revolutionise the way we interact with customers in financial services, driving better customer outcomes across the board.

To do that well, we need to treat AI like any other technology, regulating and controlling it methodically and carefully. I believe the Financial Conduct Authority (FCA) is more concerned with end results (e.g. consumer outcomes) than with the specific technology used, and will require evidence that AI is consistently reviewed and meets regulatory and compliance standards (in the same way that you need to prove you are working to deliver fair customer outcomes).

Side notes:

1. For an interesting read, I highly recommend the AI update the Financial Conduct Authority released recently: https://www.fca.org.uk/publication/corporate/ai-update.pdf

2. You can also read the UK AI bill that Lord Holmes (Sir Chris Holmes) is presenting to the House of Lords here: https://bills.parliament.uk/bills/3519

However convincing my view above may sound, I know that a lack of regulatory guidance continues to make companies hesitant to embrace AI in their businesses. This is why the UK needs to provide more guidance and, at the very least, outline risk frameworks for implementing AI.

Without clear guidelines, not only are businesses hesitant to embrace AI, but the UK risks stifling innovation and missing a significant opportunity to position itself as a leader in AI, particularly in sectors where it already excels, such as fintech, professional services, and legal services.

This is why at Koodoo we’ve been keenly analysing the EU AI Act. As other countries and regulatory bodies are likely to follow suit with their own AI regulations, this Act serves as an essential starting point to understand how future regulation might look.

Read our thoughts below:

(Quick disclaimer: Koodoo operates in the UK. The author is also not a legal expert and this is just an opinion on what is interesting about the EU AI Act!)

So, what’s interesting about the EU AI Act:

A risk-based framework for regulating AI usage

🔍 What’s Happening? The EU AI Act officially entered into force on August 1, 2024, marking a significant milestone in global AI regulation. The first obligations apply from February 2, 2025: from that date, any use of AI prohibited under the Act must stop, or the organisation risks enforcement action.

The EU AI Act categorises AI systems by risk, based on their impact on individual rights and society. While these regulations don’t directly apply to UK businesses, they provide valuable insight into how AI might be regulated globally. Understanding these categories can help UK financial services firms anticipate similar regulation in the UK or other international markets. By staying ahead of the curve, businesses can better manage the risks associated with AI and remain compliant and competitive in a rapidly evolving landscape.

Have a read through the risk levels in the EU AI Act below, and why I found them interesting!

Risk Framework under the EU AI Act

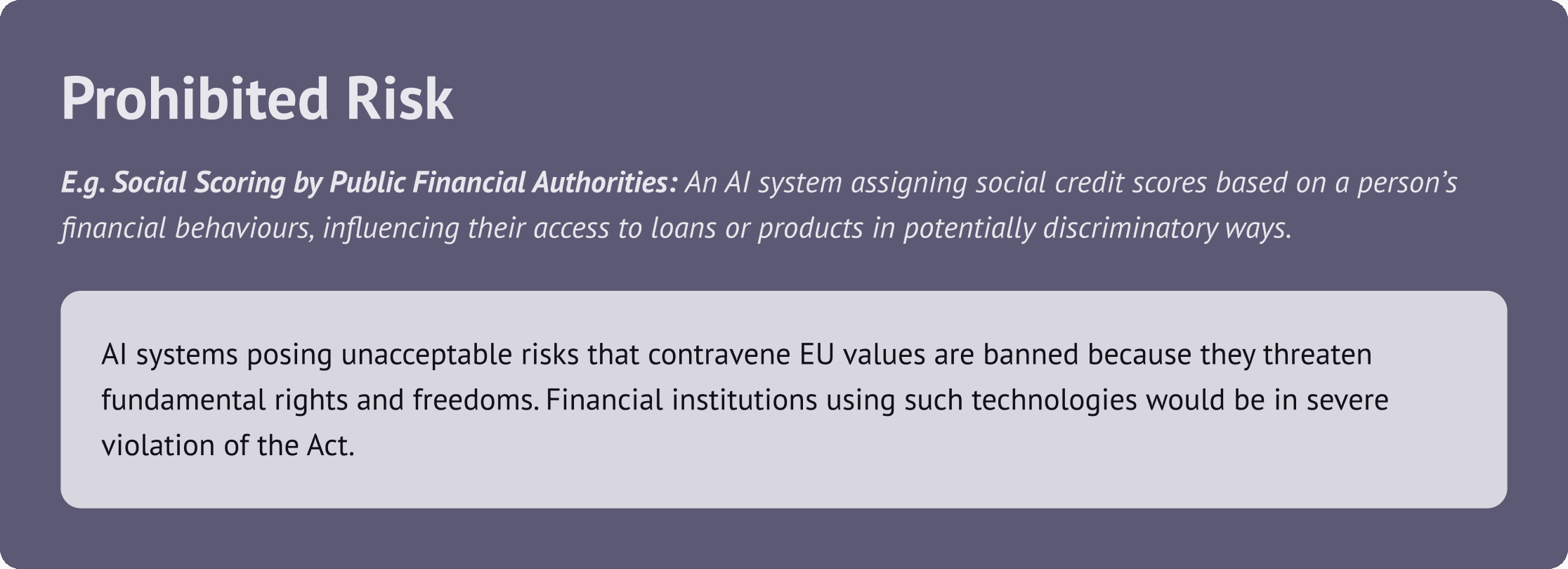

Prohibited AI Practices

AI systems that violate EU values, such as those that exploit vulnerabilities, manipulate behaviour, or carry out social scoring, are strictly banned.

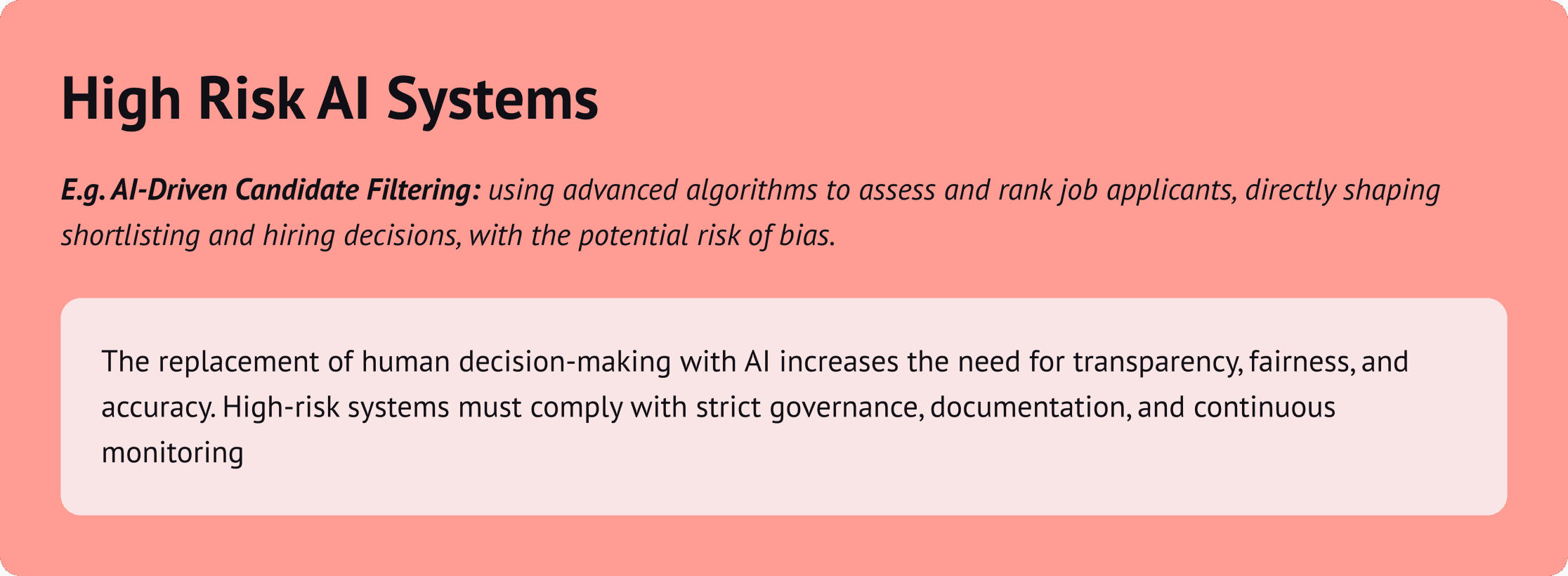

High-Risk AI Systems

These systems can significantly affect fundamental rights, safety, or societal well-being, or operate in critical sectors including biometrics, law enforcement, migration, critical infrastructure, education, employment, access to essential services, and justice.

There are exceptions where applications in these eight critical sectors would not be deemed high-risk; however, an AI system in these areas is always considered high-risk if it performs profiling of natural persons, regardless of other mitigating factors.

Limited-Risk AI Systems

These systems pose moderate risks and carry transparency obligations for certain applications, such as making sure users know they are interacting with an AI chatbot, often because of the potential for impersonation or deception in user interactions.

Minimal-Risk AI Systems

AI tools for routine tasks with minimal impact on rights or safety are considered minimal-risk; compliance with voluntary codes of conduct is encouraged.

You might be wondering: certain automated processes, such as credit scoring, have been around for some time, so why are they now considered high-risk?

AI in finance now taps into diverse data sources, such as online behaviour and utility payments. While this can improve accuracy and fairness, it also brings challenges: AI may generate biased outcomes, hallucinate incorrect information, or make flawed decisions based on its training data. These risks highlight the need for thoughtful regulation, which is what the EU AI Act aims to provide.

Although the EU AI Act doesn't directly impact the UK, it offers valuable insights into where AI requires more oversight. By adopting these principles, UK businesses can gain greater confidence in their AI strategies. Identifying high-risk areas and implementing the right controls are essential steps to ensuring AI is used safely and effectively. This not only helps businesses stay ahead of potential UK regulations but also builds the trust needed to embrace AI fully.

So, what’s the takeaway? The EU AI Act provides a clear framework for identifying where AI needs careful monitoring. Used as a benchmark, it gives UK companies a useful guide for integrating AI into their operations, helping them protect customers while preparing for a future in which AI is a cornerstone of financial services.

Putting things into practice at Koodoo

At Koodoo, we view AI as a transformative tool for financial services, enhancing efficiency and customer outcomes. To realise these benefits responsibly, we've developed Koodoo Evaluate, a tool that transcribes and analyses both manual and automated customer interactions against criteria such as vulnerability assessments and compliance standards. This approach helps us maintain the highest standards of oversight in our AI-driven interactions.

We believe that AI should be both powerful and ethical. By combining innovation with rigorous compliance, we aim to harness AI’s full potential while ensuring that our solutions remain trustworthy, responsible, and beneficial for both businesses and their customers.

What to do next?

Push for Regulatory Alignment: Advocate for the UK to develop AI regulations that keep pace with technology development and global standards.

Proactively Assess AI Risks: Use the EU AI Act's risk framework as a guide to critically consider the impact and potential risks of your AI use cases.

Enhance Transparency and Accountability: Ensure your AI-driven processes are transparent and accountable, with clear documentation and ethical oversight.

Stay Informed and Engage: Regularly monitor regulatory developments and engage with field experts to stay ahead of changes and influence the direction of AI governance.

✅ Follow Koodoo on LinkedIn today for GenAI insights and real-world applications in financial services.